Addressing Current Limitations

Gemini Diffusion, while promising, currently shows performance gaps compared with other models. It scores lower on scientific reasoning benchmarks such as GPQA Diamond and on multilingual tests such as Global MMLU (Lite), which suggests a trade-off between specialized efficiency and broader reasoning capabilities[3]. The model also struggles with reasoning tasks more generally and may need architectural tuning for logic-heavy applications[8]. More broadly, integrating structured knowledge bases with a model's pattern recognition could let it draw on a wider base of verified information when generating responses[2].
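The knowledge-base idea can be illustrated with retrieval-augmented prompting: look up verified facts about entities mentioned in a query and prepend them to the prompt before the model generates. This is a minimal sketch under stated assumptions. The `Fact` type, the sample facts, and the naive keyword matcher are all illustrative, and none of it describes how Gemini actually grounds its answers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    subject: str
    relation: str
    obj: str


# Hypothetical "verified" knowledge base; entries are illustrative only.
KNOWLEDGE_BASE = [
    Fact("water", "chemical_formula", "H2O"),
    Fact("water", "boiling_point_at_sea_level", "100 C"),
    Fact("iron", "chemical_symbol", "Fe"),
]


def retrieve_facts(query: str, kb: list[Fact]) -> list[Fact]:
    """Naive keyword retrieval: keep facts whose subject appears as a word in the query."""
    words = {w.strip("?.,!").lower() for w in query.split()}
    return [fact for fact in kb if fact.subject.lower() in words]


def build_grounded_prompt(query: str, kb: list[Fact]) -> str:
    """Prepend retrieved facts so a model can condition on verified information."""
    facts = retrieve_facts(query, kb)
    if not facts:
        return f"Question: {query}"
    context = "\n".join(f"- {f.subject} {f.relation} {f.obj}" for f in facts)
    return f"Verified facts:\n{context}\n\nQuestion: {query}"


print(build_grounded_prompt("What is the chemical formula of water?", KNOWLEDGE_BASE))
```

A production system would replace the keyword match with entity linking or vector search. The structure stays the same: retrieve first, then generate conditioned on what was retrieved.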

Enhancements in Reasoning and Multilingual Capabilities

To address these weaknesses in reasoning, Google is testing Deep Think, an enhanced reasoning mode for Gemini 2.5 Pro that considers multiple hypotheses before responding. Google reports that it achieves strong scores on difficult math benchmarks, competition-level coding, and multimodal reasoning[1]. Deep Think evaluates multiple potential responses before settling on the best one, a significant advance in AI reasoning capabilities[4]. Gemini also benefits from the integration of LearnLM, which Google positions as making it a strong model for learning[7].
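Google has not published how Deep Think works internally. The general idea of "evaluating multiple potential responses before settling on the best one" can still be illustrated with a generic best-of-N selection: generate several hypotheses, score each one with a verifier, and keep the highest-scoring answer. In this sketch, the hypotheses and the toy verifier `verify_product` are assumptions standing in for model samples and a learned or rule-based checker.

```python
from typing import Callable, Sequence


def best_of_n(candidates: Sequence[str], score: Callable[[str], float]) -> str:
    """Pick the highest-scoring candidate; on ties, the earliest one wins."""
    if not candidates:
        raise ValueError("need at least one candidate")
    return max(candidates, key=score)


def verify_product(answer: str) -> float:
    """Toy verifier for 17 * 24: checks the candidate by division rather than recomputing it."""
    value = int(answer)
    return 1.0 if value % 17 == 0 and value // 17 == 24 else 0.0


# Hypothetical hypotheses a model might propose for "What is 17 * 24?"
hypotheses = ["398", "408", "418"]
print(best_of_n(hypotheses, verify_product))  # prints 408
```

The cost of this approach grows linearly with the number of hypotheses, which is one reason modes like this are typically offered as an option rather than as the default.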

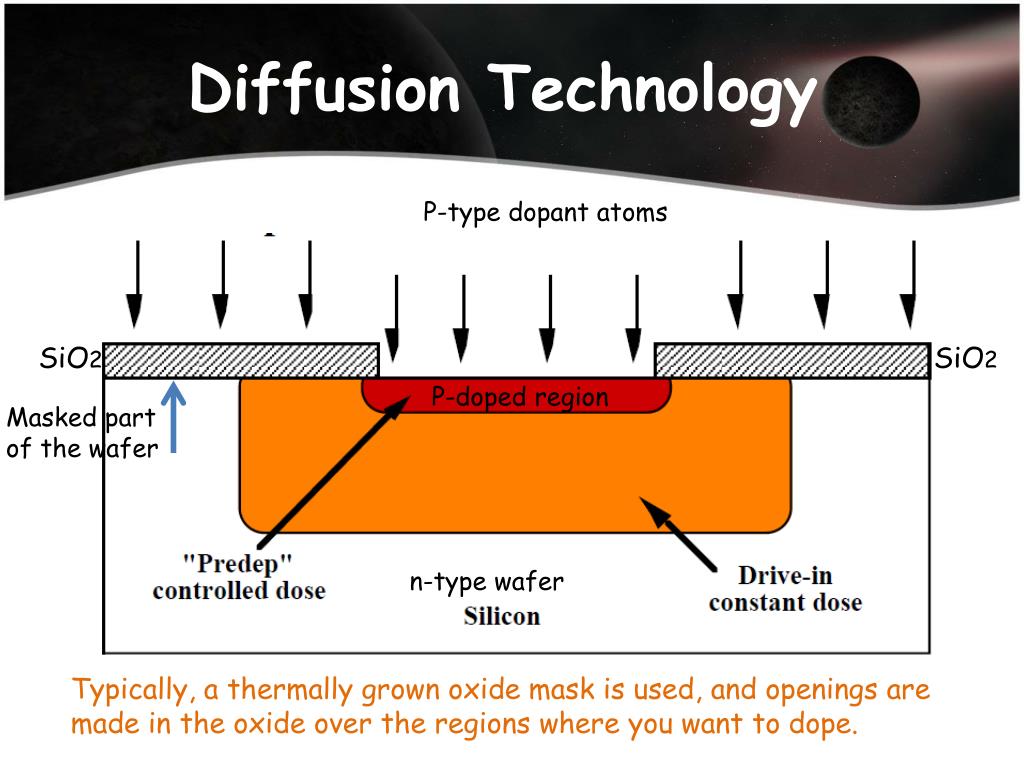

Optimizing for Speed and Efficiency

A primary goal of Gemini Diffusion is faster text generation with improved coherence, removing the need for strict 'left to right' generation[3]. Gemini Diffusion averages 1,479 tokens per second and reaches 2,000 on coding tasks, roughly 4 to 5 times faster than comparable models[3]. Google presents it as a research model for improving speed and quality in future rollouts[7]. Separately, Google launched Gemini 2.5 Flash with thinking budgets, which give developers more control over cost by balancing latency and quality, and is extending this capability to 2.5 Pro[1].
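The speed figures translate into wall-clock time with simple arithmetic: time equals output length divided by rate. If one model is 4 to 5 times faster than another, the slower model's rate is the fast rate divided by 5 (lower bound) or by 4 (upper bound). This sketch uses the average rate quoted above (1,479 per second). The arithmetic is the same whether the rate is counted in tokens or words. The 3,000-unit response length is a hypothetical example, and the calculation ignores request overhead.

```python
def generation_seconds(output_length: int, rate_per_second: float) -> float:
    """Time to emit `output_length` units (tokens or words) at a constant rate."""
    if rate_per_second <= 0:
        raise ValueError("rate must be positive")
    return output_length / rate_per_second


def implied_baseline_rates(fast_rate: float, min_speedup: float, max_speedup: float) -> tuple[float, float]:
    """Range of rates for a slower model if `fast_rate` is min_speedup..max_speedup times faster."""
    return fast_rate / max_speedup, fast_rate / min_speedup


diffusion_rate = 1479.0  # average rate reported in the text
low_rate, high_rate = implied_baseline_rates(diffusion_rate, 4.0, 5.0)
length = 3000  # hypothetical response length

print(f"diffusion model: {generation_seconds(length, diffusion_rate):.2f} s")
print(f"comparable model: {generation_seconds(length, high_rate):.2f} to "
      f"{generation_seconds(length, low_rate):.2f} s")
```

For this length, the sketch prints about 2.03 s for the diffusion model, compared with 8.11 to 10.14 s for a model that is 4 to 5 times slower.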

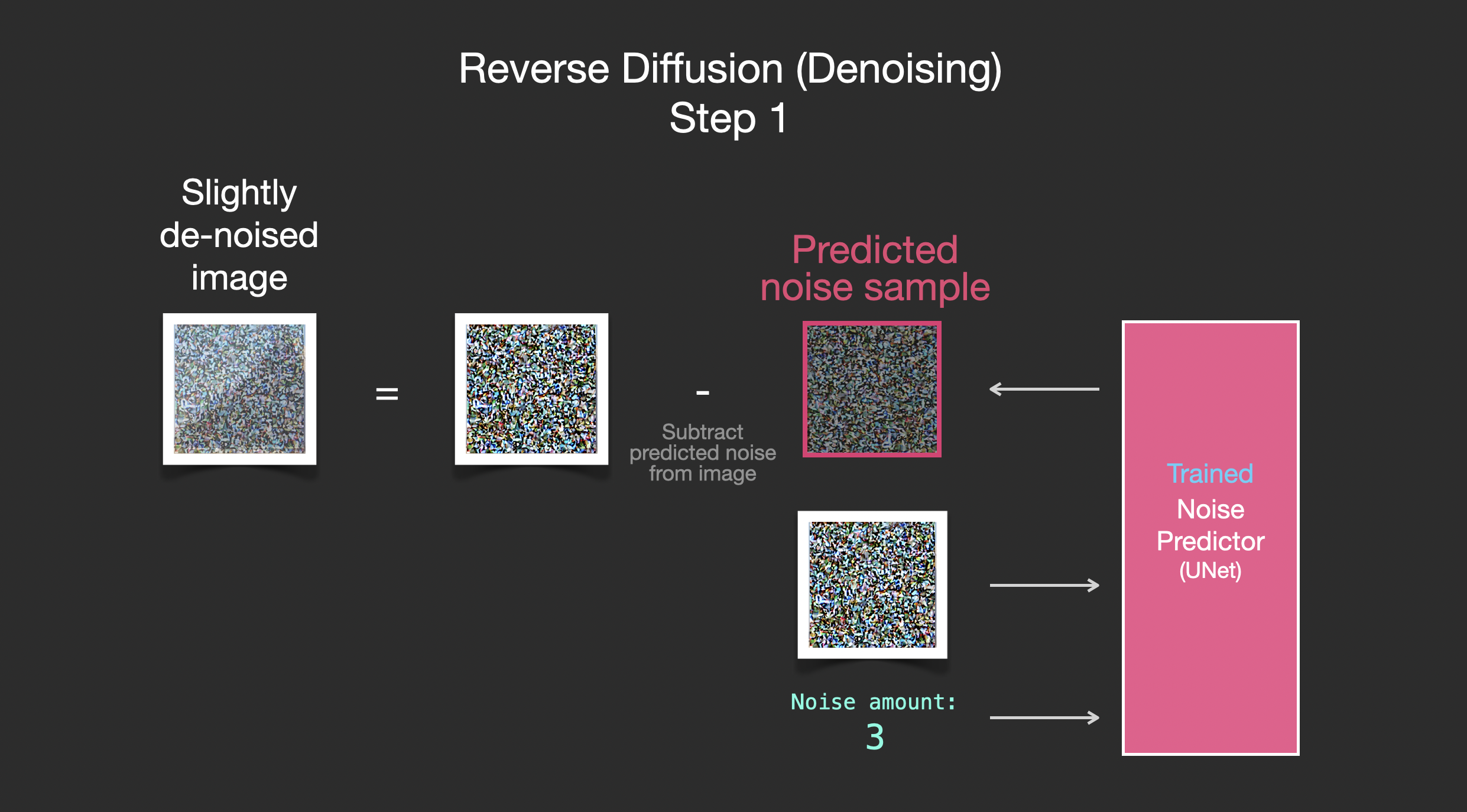

Improving Coherence and Error Correction

Gemini Diffusion refines the entire output in parallel rather than token by token, which can make longer outputs more consistent[3]. Its iterative refinement process allows for 'midstream corrections': the model can 're-mask the least confident predictions and refine them in later iterations,' improving accuracy during generation[3]. Because each step revises the whole block, the model can also make global adjustments that keep the overall flow coherent[3].
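Google has not published Gemini Diffusion's sampler. The re-masking behaviour described above can still be shown with a toy parallel decoder: start with every position masked, predict all positions at once, then re-mask the least confident predictions so that later iterations refine them. The number of re-masked positions shrinks to zero by the final step. `toy_predict` is a hypothetical stand-in for a neural denoiser, and the linear schedule is an assumption chosen for simplicity.

```python
from typing import Callable, List, Optional, Tuple

MASK: Optional[str] = None  # marks a position that still has to be predicted

# A predictor sees the partially masked sequence and returns (token, confidence)
# for every position at once, mimicking one parallel denoising step.
Predictor = Callable[[List[Optional[str]]], List[Tuple[str, float]]]


def iterative_unmask(length: int, predict: Predictor, steps: int) -> List[str]:
    """Toy parallel decoding with low-confidence re-masking.

    Every step re-predicts all positions, then re-masks the least confident
    ones; the number re-masked shrinks linearly to zero by the final step.
    """
    if steps < 1:
        raise ValueError("steps must be at least 1")
    seq: List[Optional[str]] = [MASK] * length
    tokens: List[str] = []
    for step in range(1, steps + 1):
        preds = predict(seq)
        tokens = [tok for tok, _ in preds]
        num_remask = length * (steps - step) // steps  # integer schedule, 0 at the end
        least_confident = sorted(range(length), key=lambda i: preds[i][1])[:num_remask]
        remask = set(least_confident)
        seq = [MASK if i in remask else tok for i, tok in enumerate(tokens)]
    return tokens


# Hypothetical denoiser: confident about a position only once its left neighbour is filled.
TARGET = "the cat sat on the mat".split()


def toy_predict(seq: List[Optional[str]]) -> List[Tuple[str, float]]:
    out = []
    for i in range(len(seq)):
        if i == 0 or seq[i - 1] is not MASK:
            out.append((TARGET[i], 0.9))
        else:
            out.append(("<guess>", 0.1))
    return out


print(iterative_unmask(len(TARGET), toy_predict, steps=6))
print(iterative_unmask(len(TARGET), toy_predict, steps=2))
```

With six steps, the toy recovers the full sentence. With two steps, three positions still hold `<guess>` at the end, which illustrates the trade-off between the number of refinement steps and output quality. Index 5 also shows a midstream correction: it holds `<guess>` after the first step and becomes `mat` in the second step without being re-masked.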

Focusing on Specialized Tasks

Gemini Diffusion may not be a universal replacement, but it is a highly effective tool for specific, demanding applications where speed and iterative correction are paramount[3]. Its proficiency in code generation and editing has drawn particular attention, with users noting how quickly and accurately it refactors HTML or renames variables in shaders[3]. Looking further ahead, Google expects its products to evolve substantially over the next year or two toward a universal assistant that can operate seamlessly across any domain, modality, or device[6].

Ethical Considerations and Transparency

Efforts to mitigate the limitations of Gemini and similar models are underway, with research focusing on key areas such as improved training techniques that raise the model's accuracy and reduce hallucinations[2]. Another priority is transparency, so that users can understand how the model arrived at a particular output[2]. This involves developing methods to trace the model's reasoning process, which makes it easier to identify and correct biases or errors in its outputs[2].

Leveraging Multimodal Capabilities

Gemini distinguishes itself not merely as an iteration of existing models but through its native multimodal understanding, a departure from traditional text-centric models[2]. It is engineered to understand and generate content across a range of inputs and outputs, from text to images, audio, and video[2]. Ensuring the quality and safety of the training data behind these capabilities is paramount, and rigorous data curation processes vet the content for accuracy, relevance, and appropriateness[2].

Need for Sophisticated Native Capabilities

Equipping Gemini with more sophisticated native capabilities in areas such as Enhanced Memory/Context Models, Native Pattern Analysis Tools, Integrated Ethical Framework Controls, and Context-Aware Response Modes would unlock tremendous potential for advanced research, development, and creative collaboration[5]. Accordingly, the Google AI team is encouraged to investigate options for more structured, long-term conceptual memory beyond the standard context window, perhaps akin to dynamic knowledge graphs[5].
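The recommendation above is a feature request, not an existing capability. The following is therefore only a hypothetical sketch of a memory "akin to dynamic knowledge graphs." Facts are stored as subject, relation, and object edges. A newer fact about the same subject and relation replaces the older one. Related concepts are recalled by following edges a limited number of hops. The class, its method names, and the sample facts are invented for illustration and do not correspond to any Gemini API.

```python
from collections import defaultdict


class KnowledgeGraphMemory:
    """Hypothetical long-term memory stored as (subject, relation, object) edges."""

    def __init__(self) -> None:
        # subject -> relation -> object; storing a newer fact overwrites the old one,
        # which is what makes the graph "dynamic" rather than append-only.
        self._edges: defaultdict[str, dict[str, str]] = defaultdict(dict)

    def remember(self, subject: str, relation: str, obj: str) -> None:
        self._edges[subject][relation] = obj

    def recall(self, subject: str) -> list[tuple[str, str]]:
        """All (relation, object) pairs stored for a subject, sorted for stable output."""
        return sorted(self._edges.get(subject, {}).items())

    def related(self, subject: str, depth: int = 1) -> set[str]:
        """Entities reachable from `subject` by following at most `depth` edges."""
        seen = {subject}
        frontier = {subject}
        for _ in range(depth):
            next_frontier = set()
            for node in frontier:
                for obj in self._edges.get(node, {}).values():
                    if obj not in seen:
                        seen.add(obj)
                        next_frontier.add(obj)
            frontier = next_frontier
        return seen - {subject}


memory = KnowledgeGraphMemory()
memory.remember("project_x", "uses", "gemini_api")
memory.remember("gemini_api", "provided_by", "google")
memory.remember("project_x", "status", "draft")
memory.remember("project_x", "status", "published")  # newer fact replaces "draft"
print(memory.recall("project_x"))
print(sorted(memory.related("project_x", depth=2)))
```

Unlike a context window, this structure persists across sessions and grows by relations rather than by raw tokens. How a model would read from and write to such a store is an open design question that the sketch does not address.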
