Gemini Diffusion has several limitations. It is weaker on reasoning tasks, scoring notably lower than comparable models on benchmarks such as BIG-Bench Extra Hard, which suggests that logic-heavy applications may need further architectural tuning[1][5]. In addition, although it performs well on coding and editing tasks, its speed advantage can be offset when processing long context windows: diffusion models recalculate attention over the entire context on every pass, which raises computational cost[2].
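The long-context cost argument can be illustrated with a back-of-the-envelope count of the query-key pairs that self-attention must score. This is a toy model under stated assumptions, not a description of Gemini Diffusion's actual implementation. It assumes a single attention layer, a KV cache for the autoregressive baseline, and no caching at all for the diffusion model. The prompt lengths, the 256 generated tokens, and the 32 denoising steps are arbitrary illustrative values.

```python
# Toy cost model: counts query-key pairs scored by self-attention.
# Assumptions: one attention layer, a KV cache for the autoregressive case,
# and no caching of any kind for the diffusion case.

def autoregressive_pairs(prompt_len: int, new_tokens: int) -> int:
    """With a KV cache, step t scores one new query against prompt_len + t keys."""
    return sum(prompt_len + t for t in range(1, new_tokens + 1))


def diffusion_pairs(prompt_len: int, new_tokens: int, steps: int) -> int:
    """Each denoising pass re-scores every position against every other position."""
    seq_len = prompt_len + new_tokens
    return steps * seq_len * seq_len


# Compare total attention work as the context grows (256 new tokens, 32 steps assumed).
for prompt_len in (1_000, 10_000, 100_000):
    ar = autoregressive_pairs(prompt_len, new_tokens=256)
    diff = diffusion_pairs(prompt_len, new_tokens=256, steps=32)
    print(f"prompt={prompt_len:>7}: diffusion/autoregressive work ratio = {diff / ar:,.0f}")
```

Under these assumptions, per-pass diffusion work grows with the square of the context length. Autoregressive work with a cache grows only linearly per generated token. Diffusion can still win on wall-clock time because each pass refines many tokens in parallel.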

Moreover, it inherits biases from training data and may produce inaccurate or unclear responses. This raises concerns about its reliability and the need for careful use in contexts requiring factual accuracy[3][4].

Journaling is significant as it provides emotional and cognitive benefits, including stress relief, increased self-awareness, and mindfulness. It allows individuals to express their thoughts and feelings, promoting clarity and emotional processing, which can enhance mental well-being and reduce symptoms of anxiety and depression[1][2][4].

Moreover, it serves as a tool for self-discovery, helping people reconnect with their personal experiences and identify patterns in their emotions[3][5]. Research also indicates that journaling can improve memory, boost communication skills, and even strengthen the immune system[6]. Thus, incorporating journaling into daily routines can foster resilience and personal growth.

Qwen2 was developed by the Qwen team at Alibaba Cloud. The team focuses on advancing large language models, and Qwen2 builds on its predecessor, Qwen1.5, with significant improvements in performance and multilingual capabilities[2][3].

The Qwen series, including Qwen2, emphasizes open-source development and community engagement, with resources and models available on platforms like Hugging Face and ModelScope[1][3].

Nupedia was an English-language online encyclopedia[1] founded by Jimmy Wales[1] in 1999. It aimed to publish high-quality articles written by experts and approved through a seven-step peer-review process, with content comparable to that of professional encyclopedias. Unlike Wikipedia, for which it is best known as the predecessor, Nupedia was not a wiki and relied on contributions from scholarly volunteers. It struggled to attract contributors, lost relevance as Wikipedia grew, and operated only until 2003.

Owala FreeSip Insulated Stainless Steel Water Bottle

Known for its spill-proof design and versatile drinking options, this water bottle has become a must-have[2][3].

Lululemon Everywhere Belt Bag

A trendy accessory providing a stylish way to carry essentials, praised for its practical design and popularity among various age groups[1][3].

Theragun Pro Plus

A top multifunction massage gun designed for muscle relief and recovery, with multiple attachment options[4].

Hatch Restore 2

A sleep aid device that combines an alarm clock, sunset lamp, and sound machine, with customizable wind-down and wake-up routines that support healthy sleep habits[3].

Amazon Kindle Paperwhite

A lightweight e-reader perfect for book lovers, housing a large library within its sleek design[2][3].

3Doodler Flow 3D Printing Pen

A creative tool ranked as the best for beginners, providing hours of entertainment through 3D printing[1][4].

Custom Neon Sign

A personalized decor piece available in various colors and fonts, loved for its quality and customization options[1].

Apple AirPods Max

Premium headphones featuring immersive sound quality and noise cancellation, highly recommended for music lovers[2][3].

Cozy Earth Cuddle Blanket

A luxurious throw blanket favored for its softness and ideal for cozy evenings[4].

Biib 9-in-1 Multitool Pen

A versatile pen featuring multiple functions, making it a practical gift option[1].

Nike Killshot Glitter Swoosh

A stylish sneaker combining classic design with modern glitter accents, suitable for casual wear[4].

Summer Fridays Holiday Lip Butter Balm Set

A popular lip balm set that has garnered attention for its hydrating properties and adorable packaging[2].

Kitsch Satin Heatless Curling Set

A trending hairstyling kit that creates heat-free curls overnight, a gentler option for hair health[2].

Harry & David Grand Signature Gift Basket

A beloved gift basket filled with sweet and savory snacks, making it a great present for various occasions[4].

Glossier You Rêve

A new fragrance from Glossier that's a perfect gift for both perfume novices and aficionados[2].

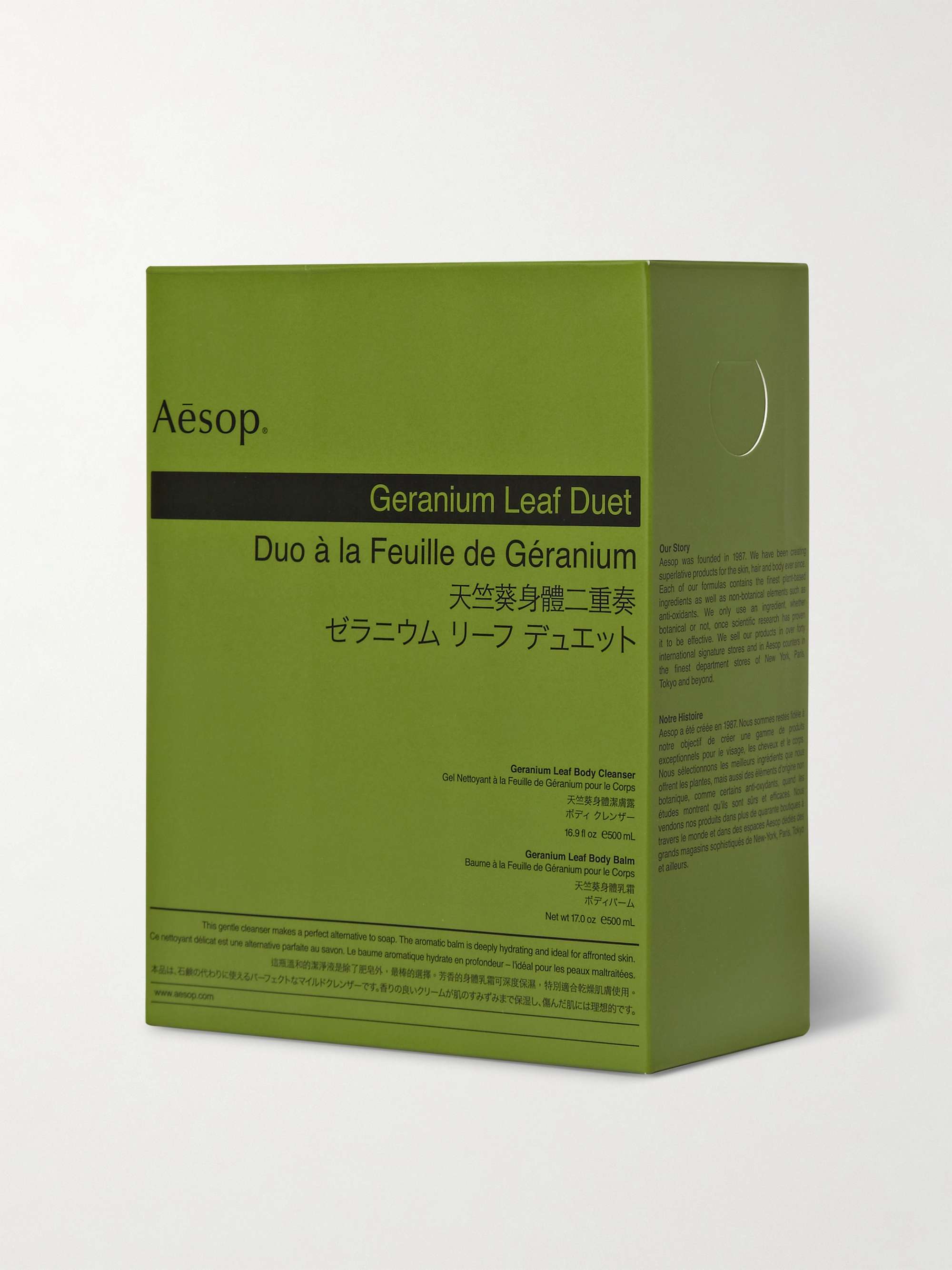

Aesop Geranium Leaf Body Cleanser and Balm Set

A luxurious body care set with a fresh feeling and great scent, ideal for self-care gifting[3].

Eberjey Gisele Modal Short PJ Set

Soft and stylish pajamas that are a crowd-pleaser when it comes to comfy loungewear gifts[2].

Mark and Graham Travel Jewelry Case

A personalized jewelry case ideal for travel, offering a practical design with a built-in mirror[2].

Cuyana Travel Case Set

A chic travel case set designed for organization, customizable with initials for a personal touch[4].

Polaroid Instant Camera

An instant print camera perfect for capturing memories in the moment during social gatherings[3].

The AT Protocol (Authenticated Transfer Protocol, or ATProto) is a decentralized protocol for large-scale social web applications. It addresses interoperability, discoverability, scalability, and data portability in decentralized social networks. It began as the Authenticated Data Experiment (ADX), developed by Bluesky, a project initially funded by Twitter. It uses a federated architecture rather than monolithic servers so that different social networking services can communicate with one another[1][5].

Each user in the AT Protocol has a permanent decentralized identifier (DID) and a configurable domain name that serves as a human-readable handle. User data is stored in signed data repositories containing records of various types, such as posts and comments[2][6]. The protocol has three core components:

- Personal Data Servers (PDS), which host user repositories.
- Relays, which collect updates from many PDSes and forward them as a single aggregated stream.
- App Views, which consume that stream, index it, and serve the application-level views that end users interact with[6][5].
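The division of labor among the three components can be shown with a toy, in-memory sketch of the data flow. Everything here is a simplifying assumption:

- The `PDS`, `Relay`, `AppView`, and `Commit` classes are hypothetical.
- Signatures, repository internals, DID resolution, and networking are omitted.
- The DIDs `did:plc:alice`/`did:plc:bob` and the record keys `1`/`2` are made-up placeholders.

The collection names `app.bsky.feed.post` and `app.bsky.graph.follow` are Bluesky record types. Records are addressed with `at://<did>/<collection>/<rkey>` URIs.

```python
from dataclasses import dataclass, field

# Toy model of the PDS -> Relay -> App View data flow. Signing, DID resolution,
# repository structure, and networking are omitted; all class names are hypothetical.


@dataclass
class Commit:
    did: str          # author's decentralized identifier (placeholder values below)
    collection: str   # record type, e.g. "app.bsky.feed.post"
    rkey: str         # record key within the collection
    record: dict


@dataclass
class PDS:
    """Hosts user repositories and queues each new record as a commit event."""
    repos: dict = field(default_factory=dict)
    outbox: list = field(default_factory=list)

    def create_record(self, did: str, collection: str, rkey: str, record: dict) -> None:
        self.repos.setdefault(did, {})[f"{collection}/{rkey}"] = record
        self.outbox.append(Commit(did, collection, rkey, record))


@dataclass
class Relay:
    """Collects commits from many PDSes into one aggregated stream (a 'firehose')."""
    firehose: list = field(default_factory=list)

    def crawl(self, pds: PDS) -> None:
        self.firehose.extend(pds.outbox)
        pds.outbox.clear()


@dataclass
class AppView:
    """Consumes the firehose and indexes posts by author for clients to query."""
    posts_by_author: dict = field(default_factory=dict)
    cursor: int = 0  # how much of the firehose has already been processed

    def consume(self, relay: Relay) -> None:
        for c in relay.firehose[self.cursor:]:
            if c.collection == "app.bsky.feed.post":
                uri = f"at://{c.did}/{c.collection}/{c.rkey}"
                self.posts_by_author.setdefault(c.did, []).append((uri, c.record["text"]))
        self.cursor = len(relay.firehose)


# Two users on two different PDSes, one relay, one App View.
alice_pds, bob_pds = PDS(), PDS()
alice_pds.create_record("did:plc:alice", "app.bsky.feed.post", "1", {"text": "hello"})
bob_pds.create_record("did:plc:bob", "app.bsky.feed.post", "1", {"text": "hi alice"})
bob_pds.create_record("did:plc:bob", "app.bsky.graph.follow", "2", {"subject": "did:plc:alice"})

relay, view = Relay(), AppView()
relay.crawl(alice_pds)
relay.crawl(bob_pds)
view.consume(relay)
print(view.posts_by_author)
```

Because user data lives in the PDS repositories rather than in the App View, a different App View could consume the same stream and build its own index. That separation is the property the protocol's federated design relies on.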

The AT Protocol uses a schema-based framework called Lexicon to standardize interactions between different services, allowing for greater flexibility and interoperation, similar to how web servers communicate through established protocols[4][5]. Additionally, it emphasizes account portability, allowing users to migrate their accounts between providers without losing their data or social connections[4].
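Lexicon schemas are JSON documents that describe record types. The sketch below writes a simplified, illustrative subset modeled on `app.bsky.feed.post` as a Python dict. It then checks records against that subset with a hypothetical `validate_record` helper. Assumptions:

- The real schema has more fields and constraints.
- Only required fields, string types, and `maxLength` are checked.
- `maxLength` is counted in UTF-8 bytes, which is our reading of the Lexicon specification.
- `format` checks are skipped.

```python
# Illustrative, simplified subset modeled on the app.bsky.feed.post Lexicon;
# the real schema defines more fields and constraints.
POST_SCHEMA = {
    "lexicon": 1,
    "id": "app.bsky.feed.post",
    "defs": {
        "main": {
            "type": "record",
            "key": "tid",
            "record": {
                "type": "object",
                "required": ["text", "createdAt"],
                "properties": {
                    "text": {"type": "string", "maxLength": 3000},
                    "createdAt": {"type": "string", "format": "datetime"},
                },
            },
        }
    },
}


def validate_record(schema: dict, record: dict) -> list:
    """Return a list of problems; an empty list means the record passed these checks.

    Only required fields, string types, and maxLength (counted in UTF-8 bytes)
    are checked; 'format' and all other constraints are ignored in this sketch.
    """
    obj = schema["defs"]["main"]["record"]
    errors = []
    for name in obj.get("required", []):
        if name not in record:
            errors.append(f"missing required field: {name}")
    for name, spec in obj.get("properties", {}).items():
        if name not in record:
            continue
        value = record[name]
        if spec["type"] == "string":
            if not isinstance(value, str):
                errors.append(f"{name}: expected string")
            elif len(value.encode("utf-8")) > spec.get("maxLength", float("inf")):
                errors.append(f"{name}: longer than {spec['maxLength']} bytes")
    return errors


print(validate_record(POST_SCHEMA, {"text": "hello", "createdAt": "2024-01-01T00:00:00Z"}))
print(validate_record(POST_SCHEMA, {"text": 42}))
```

The interoperability benefit comes from the shared schema. Any service that reads the same Lexicon can agree on what a valid post looks like without coordinating with the service that created it.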

Introduction to the Research

In a groundbreaking study, researchers Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton trained a deep convolutional neural network (CNN) to classify the 1.2 million high-resolution images of the ImageNet ILSVRC-2010 training set into 1,000 categories. On the test set, the network achieved top-1 and top-5 error rates of 37.5% and 17.0%, a notable improvement over the previous state of the art[1].
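Top-1 and top-5 error measure how often the correct label is missing from the model's single best guess or from its five best guesses, respectively. A minimal sketch of the metric follows. The `top_k_error` helper and the six-class scores are made-up toy values, not ImageNet outputs.

```python
def top_k_error(scores, labels, k):
    """Fraction of examples whose true label is not among the k highest-scoring classes.

    scores: one list of per-class scores per example; labels: true class indices.
    """
    misses = 0
    for row, label in zip(scores, labels):
        # indices of the k largest scores for this example
        top_k = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        if label not in top_k:
            misses += 1
    return misses / len(labels)


# Three toy examples over six classes (illustrative values only).
scores = [
    [0.10, 0.50, 0.20, 0.05, 0.11, 0.04],
    [0.30, 0.10, 0.20, 0.15, 0.16, 0.09],
    [0.01, 0.04, 0.10, 0.20, 0.30, 0.35],
]
labels = [1, 2, 0]
print(top_k_error(scores, labels, k=1), top_k_error(scores, labels, k=5))
```

The second example shows why top-5 error is always at most top-1 error. The true class is not the top guess, but it is still within the five best.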

The Neural Network Architecture

The network consists of five convolutional layers followed by three fully-connected layers and has about 60 million parameters. This made it one of the largest convolutional networks trained on ImageNet at the time. To make training efficient, the researchers used a highly optimized GPU implementation of 2D convolution. To reduce overfitting, they applied dropout, a then-recently developed regularization technique[1].

The architecture can be summarized as follows:

Convolutional Layers: These layers extract features from the input images, helping the network learn patterns essential for classification.

Max Pooling Layers: These are used to reduce the spatial dimensions of the feature maps, retaining essential information while reducing computational load[1].

Fully-Connected Layers: They integrate the features learned in the convolutional layers to produce the final classification output.
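The "about 60 million parameters" figure can be checked with simple arithmetic over the layer shapes. The shapes below are taken from the original AlexNet paper as we recall it. In that design, conv2, conv4, and conv5 were split into two GPU groups, so each kernel sees only half of the previous layer's feature maps. Bias terms are included. Other published counts differ slightly depending on these conventions.

```python
# Layer shapes as in the original two-GPU AlexNet design (grouped conv2, conv4, conv5).
# (name, kernel_h, kernel_w, input_channels_seen_per_kernel, num_kernels)
CONV_LAYERS = [
    ("conv1", 11, 11, 3, 96),
    ("conv2", 5, 5, 48, 256),
    ("conv3", 3, 3, 256, 384),
    ("conv4", 3, 3, 192, 384),
    ("conv5", 3, 3, 192, 256),
]
# (name, inputs, outputs); fc6 receives the flattened 6 x 6 x 256 output of the last pooling layer
FC_LAYERS = [
    ("fc6", 6 * 6 * 256, 4096),
    ("fc7", 4096, 4096),
    ("fc8", 4096, 1000),
]


def conv_params(kh, kw, cin, cout):
    return kh * kw * cin * cout + cout  # weights plus one bias per kernel


def fc_params(n_in, n_out):
    return n_in * n_out + n_out  # weight matrix plus one bias per output unit


conv_total = sum(conv_params(*shape) for _, *shape in CONV_LAYERS)
fc_total = sum(fc_params(*shape) for _, *shape in FC_LAYERS)
total = conv_total + fc_total
print(f"conv: {conv_total:,}  fc: {fc_total:,}  total: {total:,}")
print(f"share of parameters in fully-connected layers: {fc_total / total:.1%}")
```

The count comes to roughly 61 million, about 96% of which sit in the three fully-connected layers. This is one reason regularization such as dropout was targeted at those layers.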

Training and Regularization Techniques

To optimize the network's performance and prevent overfitting, several effective strategies were implemented during training:

Data Augmentation: The researchers enlarged the training set by extracting random 224×224 patches, and their horizontal reflections, from the 256×256 training images. This improved the model's ability to generalize[1].

Dropout: During training, the output of each hidden neuron in the first two fully-connected layers was set to zero with probability 0.5. Because a neuron cannot rely on the presence of particular other neurons, the network is forced to learn more robust features, which substantially reduces overfitting[1].

Local Response Normalization: Each neuron's activity is normalized by the summed activity of neurons at the same spatial position in adjacent kernel maps. This form of lateral inhibition improved generalization[1].
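The three techniques above can be sketched in a few lines of pure Python on tiny inputs. This is a simplified illustration, not the authors' GPU code; the function names are hypothetical. The defaults follow the hyperparameters reported in the paper as we understand them:

- 224×224 crops.
- Dropout probability 0.5, with test-time outputs multiplied by 0.5.
- LRN with k = 2, n = 5, α = 1e-4, β = 0.75.

```python
import random

# Pure-Python sketches on tiny inputs; a real implementation operates on GPU tensors.


def random_crop_and_flip(image, size=224, rng=random):
    """Take a random size x size patch from an H x W image (nested lists), mirrored with p = 0.5."""
    h, w = len(image), len(image[0])
    top = rng.randint(0, h - size)    # randint is inclusive, so every valid offset is possible
    left = rng.randint(0, w - size)
    patch = [row[left:left + size] for row in image[top:top + size]]
    if rng.random() < 0.5:
        patch = [row[::-1] for row in patch]  # horizontal reflection
    return patch


def dropout(activations, p=0.5, train=True, rng=random):
    """Zero each unit with probability p in training; at test time keep every unit
    and scale outputs by (1 - p), which is 0.5 for p = 0.5."""
    if train:
        return [0.0 if rng.random() < p else a for a in activations]
    return [a * (1 - p) for a in activations]


def local_response_norm(channels, k=2.0, n=5, alpha=1e-4, beta=0.75):
    """Normalize the activities at one (x, y) position across n adjacent kernel maps."""
    num = len(channels)
    out = []
    for i, a in enumerate(channels):
        lo, hi = max(0, i - n // 2), min(num - 1, i + n // 2)
        denom = (k + alpha * sum(channels[j] ** 2 for j in range(lo, hi + 1))) ** beta
        out.append(a / denom)
    return out


rng = random.Random(0)
image = [[(r, c) for c in range(256)] for r in range(256)]  # 256 x 256 toy "pixels"
patch = random_crop_and_flip(image, rng=rng)
print(len(patch), len(patch[0]))  # 224 224
print(dropout([1.0, 2.0, 3.0, 4.0], rng=rng))  # some units zeroed at random
print(dropout([1.0, 2.0], train=False))  # [0.5, 1.0]
print(local_response_norm([1.0, 5.0, 1.0, 0.0, 2.0]))
```

The test-time scaling in `dropout` keeps the expected input to the next layer the same in training and inference. It approximates averaging over the many "thinned" networks sampled during training.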

Results and Performance

The deep CNN achieved remarkable results, showing that a network of this size could reach accuracy well beyond that of previous methods. In the ILSVRC-2012 competition, the authors entered a variant that averaged the predictions of several CNNs. Two of these were pre-trained on the entire ImageNet Fall 2011 release and then fine-tuned on ILSVRC-2012. The entry achieved a winning top-5 test error rate of 15.3%, compared with 26.2% for the second-best entry[1].

The researchers also stressed the importance of depth. Removing any single convolutional layer degraded performance; removing any of the middle layers, for example, cost about 2% in top-1 accuracy[1].

Visual Insights from the Model

To evaluate the CNN qualitatively, the authors examined its top-5 predictions on test images. The model often recognized objects that were off-center, and most of its top-5 labels were reasonable. Some errors arose where an image was genuinely ambiguous about which object was the intended focus[1].

The authors also showed that the trained model can retrieve similar images. They compared the 4096-dimensional activation vectors of the last hidden layer and treated images whose vectors are close in Euclidean distance as similar. The retrieved images were often not close at the pixel level, suggesting that the learned features capture visual similarity at a higher level[1].
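A minimal sketch of this retrieval step is a brute-force nearest-neighbor search by Euclidean distance over stored feature vectors. The `nearest_images` helper and the 3-dimensional toy vectors are assumptions for illustration; in the paper, the vectors would be 4096-dimensional activations of the last hidden layer.

```python
import math


def nearest_images(query, gallery, k=3):
    """Return the ids of the k gallery images whose feature vectors are closest
    to `query` in Euclidean distance. `gallery` maps image id -> feature vector."""
    return sorted(gallery, key=lambda img_id: math.dist(query, gallery[img_id]))[:k]


# Toy 3-dimensional vectors standing in for 4096-dimensional activations.
gallery = {
    "cat_1": [0.9, 0.1, 0.0],
    "cat_2": [0.8, 0.2, 0.1],
    "truck": [0.0, 0.1, 0.9],
    "bus":   [0.1, 0.0, 0.8],
}
print(nearest_images([1.0, 0.0, 0.0], gallery, k=2))  # ['cat_1', 'cat_2']
```

A linear scan like this is simple but slow for large collections of high-dimensional vectors. A practical system would compress the features or use an approximate nearest-neighbor index.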

Future Directions

While the results showcased the capabilities of deep learning in image classification, the authors acknowledged that the network's performance could further improve with more extensive training and architectural refinements. They hinted at the potential for future work to explore different architectures and training datasets to enhance model performance[1].

Additionally, as advancements in computational power and methodologies continue, larger architectures may become feasible, enabling even deeper networks for more complex image classification tasks[1].

Conclusion

The study on deep convolutional neural networks for ImageNet classification represents a significant milestone in the field of computer vision. By effectively combining strategies like dropout, data augmentation, and advanced training methods, the researchers set new standards for performance in image classification tasks. This research not only highlights the potential of deep learning but also opens doors for future innovations in artificial intelligence and machine learning applications[1].

Google's generally a second-price auction. So each spot higher in the order is more expensive.

Mr. Hurst[5]

In a first-price auction, an auction winner will be charged exactly what they had submitted as their bid.

THE WITNESS[1]

In contrast, within a second-price auction, an advertiser is not necessarily charged exactly what they bid.

THE WITNESS[1]

The amount that we charge an advertiser is the least that they hypothetically could have bid while still receiving their allocation.

THE WITNESS[1]

Google uses a second-price auction for search text ads because we consider it to be a more advertiser-friendly auction mechanism.

DR. JUDA[1]
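The difference between the two pricing rules described in the testimony can be sketched with a textbook generalized second-price model. This is an illustration under assumptions, not Google's actual formula. Ads are ranked by bid × quality score; the quality weighting and the `run_auction` helper are hypothetical. Under the second-price rule, each winner pays the least it could have bid while still keeping its position. Ties, reserve prices, and minimum increments are ignored.

```python
def run_auction(bids, quality, slots, pricing="second"):
    """Rank ads by bid * quality and price each filled slot.

    pricing="first": the winner pays exactly its own bid.
    pricing="second": the winner pays the least it could have bid while still
    outranking the next ad (ties, reserve prices and increments are ignored).
    """
    ranked = sorted(bids, key=lambda ad: bids[ad] * quality[ad], reverse=True)
    results = []
    for i, ad in enumerate(ranked[:slots]):
        if pricing == "first":
            price = bids[ad]
        elif i + 1 < len(ranked):
            nxt = ranked[i + 1]
            # smallest bid b with b * quality[ad] >= next ad's rank score
            price = bids[nxt] * quality[nxt] / quality[ad]
        else:
            price = 0.0  # nobody ranked below; a real auction would apply a reserve price
        results.append((ad, price))
    return results


bids = {"A": 4.0, "B": 3.0, "C": 1.0}
equal_quality = {"A": 1.0, "B": 1.0, "C": 1.0}
print(run_auction(bids, equal_quality, slots=2, pricing="first"))   # [('A', 4.0), ('B', 3.0)]
print(run_auction(bids, equal_quality, slots=2, pricing="second"))  # [('A', 3.0), ('B', 1.0)]
```

With equal quality scores, the higher slot costs more under the second-price rule because it is priced at a higher competing bid. This matches the remark that each spot higher in the order is more expensive.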
