Discover Pandipedia

Pandipedia is the world's first encyclopaedia of machine generated content approved by humans. You can contribute by simply searching and clicking/tapping on "Add To Pandipedia" in the answer you like.

Expand the world's knowledge as you search and help others. Go you!

Overview of Search Indexing and User Experience

Google’s search system relies heavily on a comprehensive index and robust evaluation metrics to deliver high-quality results. The search engine uses an algorithmic process similar to an index at the back of a book, where every significant term corresponds to the pages on which it appears. Without a clear and comprehensive index, useful documents remain unreachable for users, which directly impacts the quality of search results[1]. Additionally, Google continuously crawls the web to acquire new content, although there have been periods where the overall number of documents in the index has shrunk due to factors such as increasing document sizes and improved understanding of content. Another critical factor is how search results are displayed on different platforms. For instance, mobile devices, with their limited screen space, influence how results are prioritized and rendered compared to desktop systems. Mobile searches, which tend to carry more location-specific intent, necessitate a customized approach that acknowledges both the physical constraints and the user’s preferences[1].
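The book-index analogy above corresponds to what is usually called an inverted index. The following is a minimal sketch of that idea, not a description of Google's actual implementation; the function names, the tokenization rule, and the sample documents are all assumptions made for illustration.

```python
import re
from collections import defaultdict


def build_inverted_index(documents):
    """Map each lowercase term to the sorted list of document ids containing it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        # Hypothetical tokenizer: runs of letters and digits, lowercased.
        for term in re.findall(r"[a-z0-9]+", text.lower()):
            index[term].add(doc_id)
    return {term: sorted(ids) for term, ids in index.items()}


def search(index, query):
    """Return ids of documents that contain every term in the query."""
    terms = re.findall(r"[a-z0-9]+", query.lower())
    if not terms:
        return []
    result = set(index.get(terms[0], []))
    for term in terms[1:]:
        result &= set(index.get(term, []))
    return sorted(result)


# Illustrative documents only.
documents = {
    1: "Mobile search results near me",
    2: "Desktop search ranking",
    3: "Mobile screen space is limited",
}
index = build_inverted_index(documents)
```

A document that never makes it into `index` can never be returned by `search`, which is the sense in which an incomplete index leaves useful documents unreachable.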

Advances in Machine Learning and Their Impact on Search

Google’s shift from hand-developed ranking systems to machine learning models has substantially improved search relevance. Early reliance on manually defined algorithms gradually gave way to neural networks such as RankBrain, BERT, T5, and LaMDA. RankBrain was a significant milestone that helped process the intricate links between web pages, and subsequent models have enhanced the system’s understanding of natural language queries[1]. These developments have allowed Google to better capture the nuances of human language and deliver results that more accurately reflect what users are seeking. This progression not only supports traditional search outcomes but also enhances dynamic search features such as images, videos, and answer panels that contribute to the overall user experience.

Evaluation and Metrics of Search Quality

To measure and improve search quality, Google employs specific metrics such as Information Satisfaction (IS) and Page Quality (PQ). These metrics are designed to evaluate both the overall reliability of search results and the accuracy of individual pages. The use of human raters, who adhere to established guidelines, provides critical feedback on the performance of the search system. This comprehensive process involves live experiments where changes are evaluated through side-by-side comparisons and iterative testing. Even subtle shifts in these metrics are monitored closely, as small improvements in IS scores are believed to translate into noticeable enhancements in user satisfaction[1][2].
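A side-by-side comparison of this kind can be sketched as a per-query difference in rater scores. The example below is only an illustration under stated assumptions: the 0 to 100 scale, the function name, and the sample scores are hypothetical and are not taken from the cited sources.

```python
from statistics import mean


def side_by_side_delta(control_scores, experiment_scores):
    """Mean per-query rating difference (experiment minus control)."""
    if len(control_scores) != len(experiment_scores):
        raise ValueError("need one score pair per query")
    return mean(e - c for c, e in zip(control_scores, experiment_scores))


# Hypothetical rater scores for the same four queries under two ranking variants.
control = [60, 72, 55, 80]
experiment = [62, 72, 58, 81]
delta = side_by_side_delta(control, experiment)
```

Pairing scores by query keeps the comparison focused on the change being tested rather than on differences between queries.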

The Role of User-Side Data in Enhancing Search Quality

User-side data plays a crucial role in refining Google’s search results by offering insights into user behavior and preferences. According to expert testimonies in legal proceedings, such data helps Google understand which pages users click on, how long they stay on a page, and whether they promptly return to the search results. These behavioral signals are instrumental in training algorithms to decide which pages are most relevant and should be kept in the index[2]. Furthermore, the benefits of this data extend to improving spelling corrections and the overall performance of search features. Expert opinions also highlight that experiments reducing user-side data for system training do not fully capture the subtle yet significant improvements observed when such data is employed, especially for long-tail queries[2].
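The three behavioral signals named above (which pages are clicked, how long users stay, and whether they return quickly) can be summarized per page with a small aggregation. This is a sketch under assumptions, not Google's method: the `Click` record, the 10-second quick-return threshold, and the sample log are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Click:
    url: str
    dwell_seconds: float  # time spent on the page before returning to results


def summarize_clicks(clicks, quick_return_threshold=10.0):
    """Aggregate clicks per URL into count, mean dwell time, and quick-return rate."""
    totals = {}
    for click in clicks:
        stats = totals.setdefault(
            click.url, {"clicks": 0, "total_dwell": 0.0, "quick_returns": 0}
        )
        stats["clicks"] += 1
        stats["total_dwell"] += click.dwell_seconds
        # Assumed rule: a visit shorter than the threshold counts as a quick return.
        if click.dwell_seconds < quick_return_threshold:
            stats["quick_returns"] += 1
    return {
        url: {
            "clicks": s["clicks"],
            "mean_dwell": s["total_dwell"] / s["clicks"],
            "quick_return_rate": s["quick_returns"] / s["clicks"],
        }
        for url, s in totals.items()
    }


# Illustrative log only.
log = [Click("a.example", 45.0), Click("a.example", 5.0), Click("b.example", 3.0)]
report = summarize_clicks(log)
```

Rare queries produce few such records, which is one way to see why the long-tail case depends on having a large volume of user-side data.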

Competitive Dynamics and Search Agreements

Competition in the search market is heavily influenced by strategic agreements with browser and platform providers. These agreements, which often involve setting Google as the default search engine on various browsers and devices, have long been scrutinized for their impact on market competition. Analysis from legal proceedings details how these default settings can create significant barriers to entry for other search providers by reinforcing Google's dominant position. Such arrangements not only affect the competitive process but also generate strong incentives for browser makers to choose a default search engine that they believe offers the best overall performance[3]. The economic reasoning behind these agreements comes into focus when considering that the integration of search functionality in browsers is almost as essential as having tires on a car. Browser defaults offer consumers an out-of-the-box solution and result in price competition among providers, with payments linked to the default status contributing to lower device costs and enhanced service revenue[3].

Market Power and Its Impact on Advertising

Apart from search quality, Google’s market dominance is also reflected in its advertising practices. Legal arguments presented in court highlight that Google has maintained substantial market power across multiple dimensions: general search services, search text advertising, and broad search advertising with specific emphasis on the United States market. The use of exclusive default agreements, whereby Google is pre-installed or set as the default search option, contributes significantly to its stronghold in the market. This practice effectively prevents rivals from gaining traction and forces advertisers, particularly specialized vertical providers, to rely on Google’s services despite increased advertising costs[4]. Expert testimonies further suggest that the rise in customer acquisition costs among advertisers is a direct consequence of these practices. While such exclusive agreements may foster some degree of price competition related to the default status, they ultimately entrench Google’s dominance, limiting the scope for competitors to challenge its market position[4][3].

Conclusions and Ongoing Developments

The insights derived from the various sources present a comprehensive picture of both the technical and competitive aspects of Google’s search ecosystem. The use of an expansive index, underpinned by continuous crawling and robust user data analysis, has been critical to maintaining high search quality. Simultaneously, the integration of advanced machine learning models has significantly enhanced the system’s ability to interpret and respond to user queries. However, these advancements are set against a backdrop of intense market competition where default search agreements and strategic partnerships with browsers and platforms play a dominant role. These arrangements not only provide convenience to users but also contribute to sustaining Google’s market power in the search and advertising domains. Despite the improvements in search quality and user experience achieved through refined metrics and live experiments, challenges persist from both a competition and regulatory perspective, as evidenced by ongoing legal debates and expert critiques[1][2][3][4].


The Autobiography of a Yogi by Paramahansa Yogananda is a spiritual classic first published in 1946. It recounts Yogananda's life, his search for his guru, and teachings on Kriya Yoga, ultimately introducing many to meditation and yoga. The book has been influential in both Eastern and Western spiritual circles and has been translated into over fifty languages. Notable admirers include Steve Jobs and George Harrison, both of whom credited the book with profoundly impacting their lives[1].

Yogananda, originally Mukunda Lal Ghosh, was born in Gorakhpur, India. His narrative includes significant encounters with various spiritual figures and details his journey to America, where he established the Self-Realization Fellowship and shared his teachings widely[1]. In 1999, the book was recognized as one of the '100 Most Important Spiritual Books of the 20th Century'[1].

The text does not contain an answer regarding content from sources [2] and [3].



Decluttering your space has become a prominent strategy for improving mental health and overall well-being. Research shows that our physical environment has a profound effect on our psychological state. Clutter can exacerbate feelings of anxiety, stress, and lack of control, while a tidy space can promote calmness and productivity.

The Psychological Burden of Clutter


Living in clutter can significantly impact mental health. Studies indicate that disorganized environments are associated with increased levels of the stress hormone cortisol and a higher likelihood of experiencing mood disorders such as depression and anxiety. Clutter often serves as a constant reminder of unfinished tasks, contributing to an overwhelming sense of cognitive overload that can drain energy and focus ([1][10][11]).

Moreover, the chaos typically associated with clutter tends to limit one’s ability to process information effectively. As noted in various studies, individuals working in cluttered spaces often find it difficult to concentrate, leading to procrastination and reduced productivity. This cognitive distraction can deepen feelings of frustration and helplessness, creating a vicious cycle where disorganization worsens mental health, and deteriorating mental health makes it harder to declutter ([2][3][4][5][10]).

Decluttering as a Therapeutic Practice


Decluttering serves not only as a means to tidy a physical space but also as a method to regain control over one’s environment and reduce emotional distress. The act of sorting through belongings provides a sense of accomplishment and effectively organizes both external and internal chaos. By making decisions about what to keep or let go, individuals experience a form of empowerment, improving self-esteem and fostering a sense of agency in their lives ([2][4][9]).

In fact, many people report that decluttering leads to an immediate emotional uplift, as it often releases feelings of guilt and embarrassment associated with unkempt spaces. The therapeutic aspect of decluttering is supported by the satisfaction and relief that comes with creating a clean, organized environment. This process can be psychologically liberating, as it allows individuals to shed not just physical items but also the associated emotional burdens ([6][9][10][11]).

Cognitive and Emotional Benefits

Research indicates that a clean and organized environment correlates with improved focus and cognitive clarity. The push for organization minimizes distractions, allowing individuals to concentrate more effectively on tasks at hand. As clutter decreases, so does cognitive overload, leading to clearer thinking and enhanced problem-solving capabilities ([5][8]).

Additionally, a tidy home can contribute to better relationships. Clutter can be a source of tension among family members or roommates, leading to conflicts or feelings of inadequacy when socializing. A well-organized living space allows individuals to feel more comfortable inviting friends over, facilitating social interactions that have positive ramifications for mental health ([1][6][10]).

Practical Strategies for Decluttering

While decluttering may seem daunting, several strategies can make the process manageable and effective. Starting small by designating specific areas, like a single room or even a drawer, can create a sense of progress without triggering overwhelming feelings of inadequacy. Setting achievable goals for each decluttering session not only simplifies the task but also builds motivation as individuals see their space transform into a more serene environment ([3][6][9][11]).

Engaging family members or friends in the decluttering process can also alleviate the mental burden of tackling clutter alone. Collaborative efforts promote shared responsibility and may even strengthen relationships through a sense of teamwork. Additionally, creating a giveaway or disposal plan can lessen the emotional weight of parting with belongings, facilitating emotional and psychological release ([5][7][9][11]).

Long-term Mindfulness and Maintenance

Incorporating mindfulness into the decluttering process is essential for sustaining the long-term benefits of an organized space. Mindful decluttering requires intentional decisions about what to keep, fostering awareness around belongings and their significance. Practicing the 'one-in, one-out' rule—whereby one item is discarded or donated for every new item brought into the home—encourages a continuous evaluation of physical possessions and reduces the likelihood of future clutter buildup ([6][9][10][11]).

Furthermore, regular maintenance routines, such as daily tidying sessions, can prevent clutter from accumulating over time and help individuals maintain the mental clarity gained through decluttering. A well-organized environment not only enhances mood but also contributes significantly to overall emotional well-being ([2][3][6][8]).

In conclusion, decluttering is not merely about physical cleanliness; it is about taking control of your mental environment. By reducing clutter, individuals can experience improved psychological health, greater focus, and an enhanced quality of life.


Recent advancements in artificial intelligence have led to significant developments across various sectors as we approach the end of 2024. Here are some highlights:

AI Integration and Use Cases: A Forbes article discusses the prominent use cases of AI in 2024, showcasing applications such as search engines like Perplexity.AI, CV enhancement tools, grammar checkers, and virtual assistants. These use cases emphasize task-oriented functions that free up time for high-value activities requiring human creativity and judgment. The article also points to the trend of personalized AI agents that handle routine tasks, projected to enhance productivity in both personal and professional settings[1].

Generative AI Evolution: As generative AI continues to evolve, a focus is on creating multimodal systems that can understand and generate content across different formats, such as text, audio, and video. This capability isn't just about fun applications; it’s also seen in practical areas like healthcare, where AI helps analyze medical data and improve diagnostics[9]. In media, AI is transforming filmmaking processes, offering new tools for content generation and special effects[11].

AI Regulation Initiatives: The landscape of AI regulation is rapidly developing. The EU's AI Act aims to set standards for AI use and ensure transparency, particularly concerning high-risk applications. Similarly, the U.S. is taking steps with executive orders intended to oversee AI safety and efficacy, leading to a wave of compliance measures businesses must consider[9][11].

Emerging AI Technologies: Innovations include specialized AI systems tailored to specific organizational needs, enhancing sectors like finance, healthcare, and retail. The rise of 'agentic AI,' which performs tasks autonomously and proactively, marks a shift from reactive to proactive AI systems capable of handling complex workflows without direct human oversight[8][9].

Creative Applications and Ethical Considerations: While the effectiveness of generative AI tools is being lauded, concerns regarding misinformation via deepfake technology and ethical implications of AI-generated content are growing. Companies are addressing these challenges by developing technologies to detect AI-generated content and ensuring ethical standards in AI development and deployment[9][11].

These trends indicate that 2024 is shaping up to be a pivotal year for AI, with an emphasis on integration, innovation, and the balancing act of ethics and regulation amidst rapid technological changes.



Beginning an exercise routine at home can be a simple yet effective way to improve your health and fitness. Here are several tips and insights for beginners looking to make this journey more manageable and enjoyable.

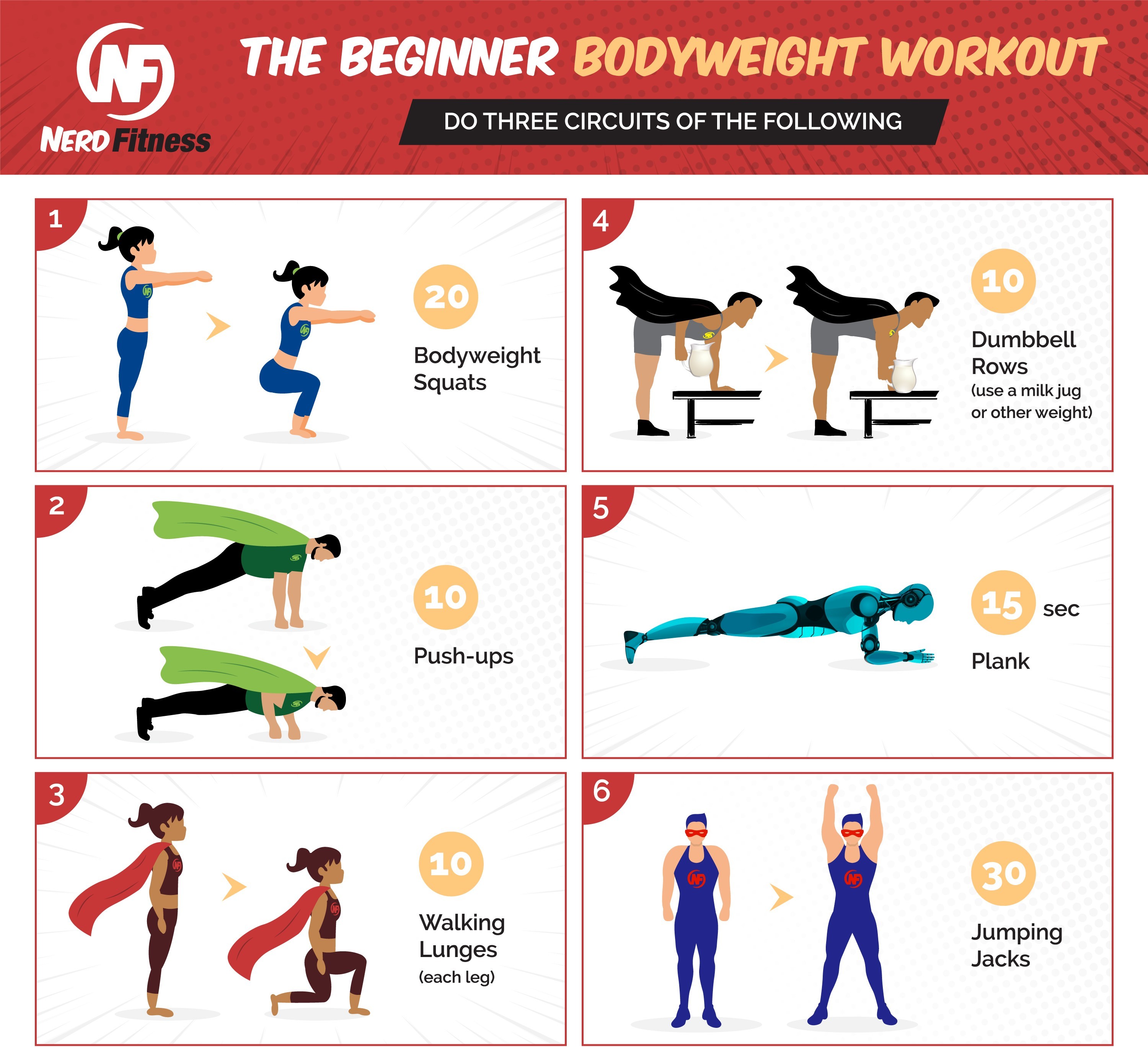

Choose Simple Workouts

You don't need to engage in long or complex workouts to see benefits. Beginner workouts can yield real results without requiring expensive equipment or elaborate plans. Simple exercises that utilize your body weight can effectively improve fitness, boost confidence, and increase energy levels. Starting with just a few minutes of exercise daily can lead to noticeable improvements in your fitness over time[1][4].

Consult Your Healthcare Provider

Before starting any new exercise program, especially if you have a prior health condition such as diabetes or heart issues, it's essential to consult with a healthcare provider. They can help you understand necessary modifications to ensure safety during your workouts[1].

Set Manageable Goals

Establishing a clear, attainable goal is crucial. Consider formulating a SMART goal—one that is specific, measurable, attainable, relevant, and time-bound. Writing it down and placing it where you can see it regularly will help keep you accountable and committed to your fitness journey[1].

Incorporate Everyday Movements

Everyday activities can be used to create a workout. For instance, movements like getting in and out of a chair or walking up and down stairs can improve strength, balance, and flexibility[1]. These activities can be repeated for a quick, effective workout session that fits seamlessly into your daily life.

Home Circuit Workout

Once comfortable with basic movements, you can create a simple circuit workout at home. Combine exercises like:

Chair Sit-to-Stand: Repeatedly sit down and stand up from a chair.

Stair Walking: Go up and down stairs for cardiovascular and strength benefits.

Floor Transitions: Practice getting up and down from the floor to enhance coordination and strength.

Doing each movement several times in a sequence can emulate a structured workout without requiring a gym visit[1][3].

Maximize Household Chores

You can easily turn household chores into a workout. Activities like sweeping, vacuuming, or even gardening can increase your heart rate and engage various muscle groups. For example, sweeping utilizes your core and oblique muscles, making it a great way to sneak in exercise while accomplishing chores[1][3].

Bodyweight and Flexibility Exercises

Bodyweight exercises are especially beneficial as they require no equipment and can be modified to various skill levels. Some easy and effective options include:

Bridges: Lie on your back with knees bent, and lift your hips to engage the glutes.

Modified Push-ups: Begin on your knees and lower your body toward the floor to build upper body strength.

Squats: Start with your back against a wall or a chair to master your form before progressing to freestanding squats.

These exercises can be scaled in intensity as fitness progresses. Aim for two to four times a week, performing 10 to 15 repetitions of each exercise with breaks in between[3][4].

Warm-Up and Cool Down

Always incorporate a warm-up and cool-down routine. Dynamic warm-ups can include simple movements like jogging in place, jumping jacks, or arm circles to prepare your body for exercise and prevent injury. Post-workout stretching is equally important to aid recovery and improve flexibility[2][3].

Social Support and Community

Engaging family and friends in your fitness journey can provide motivation and support. Sharing your goals with others enhances accountability, making it easier to stick to the program. Additionally, consider online communities for tips, workout plans, and encouragement from like-minded individuals[1][4].

Stay Consistent and Progress Gradually

Listen to your body and allow for rest days, as strength is built during recovery periods. Follow a pattern such as strength training followed by cardio or flexibility work on alternating days to maintain a balanced routine[2][4]. Progress should be gradual; as you grow more comfortable with basic movements, start to increase repetitions or introduce more challenging variations.

In summary, starting an exercise routine at home can be straightforward. By focusing on simple movements, setting realistic goals, and consistently engaging in various exercises, beginners can successfully enhance their fitness and overall well-being. With determination and the right approach, anyone can enjoy the journey to better health from the comfort of their home.


Introduction to Variational Lossy Autoencoders

Variational autoencoders (VAEs) are a class of generative models designed to learn representations of data that are amenable to downstream tasks like classification. The Variational Lossy Autoencoder (VLAE) offers a different perspective: it leverages lossy encoding to improve both representation learning and density estimation.

What is a Variational Lossy Autoencoder?

The core idea behind a VLAE is to combine the strengths of variational inference with the lossy encoding properties of certain types of autoencoders. In essence, traditional VAEs aim to reconstruct data as accurately as possible, often leading to overly complex representations that capture noise rather than relevant features. In contrast, VLAEs intentionally embrace a lossy approach, aiming to retain the essential structure of the data while discarding unnecessary details. This is particularly useful when considering high-dimensional data, such as images, where the goal is not always precise reconstruction but rather capturing the most relevant information[1].

Representation Learning and Density Estimation

VLAEs facilitate representation learning by focusing on the components of the data that are most salient for downstream tasks. For instance, a good representation can be essential for image classification, where capturing the overall shape and visual structure is often more important than faithfully reconstructing each pixel[1].

The authors propose a method that integrates autoregressive models with VAEs, enhancing generative modeling performance. By explicitly controlling what information is retained or discarded, VLAEs can potentially achieve better performance on various tasks compared to traditional VAEs, which tend to preserve too much data[1].

Mechanism of Variational Lossy Autoencoders

In a typical VLAE setup, the model incorporates a global latent code along with an autoregressive decoder which models the conditional distribution of the data[1]. This approach helps in efficiently utilizing the latent variable framework. The authors note that previous applications of VAEs often neglected the latent variables, which led to suboptimal representations. By using a simple yet effective decoding strategy, VLAEs can ensure that learned representations are both efficient and informative, striking a balance between accuracy and complexity[1].
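For orientation, the standard VAE training objective and an autoregressive decoder conditioned on a global latent code can be written as follows. This is the textbook form rather than a quotation from the cited paper, and the symbols are assumptions introduced here: $x$ is a data point with components $x_i$, $z$ is the latent code, $q(z \mid x)$ is the encoder, $p(x \mid z)$ is the decoder, and $p(z)$ is the prior.

```latex
\log p(x) \;\ge\; \mathbb{E}_{q(z \mid x)}\big[\log p(x \mid z)\big]
          \;-\; D_{\mathrm{KL}}\big(q(z \mid x)\,\|\,p(z)\big)

p(x \mid z) \;=\; \prod_{i} p\big(x_i \mid z,\; x_{<i}\big)
```

If the decoder can already model $x$ well from the preceding components $x_{<i}$ alone, the objective is maximized by driving the KL term toward zero, which means $z$ carries little information. That is one way to read the remark that latent variables were often neglected, and it suggests why restricting what the decoder can see would give control over what the latent code retains.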

Technical Background

The architecture of a VLAE generally builds upon traditional VAE models but introduces innovations to address the shortcomings of standard approaches. For example, the model can be structured to ensure that certain aspects of information are retained while others are discarded, facilitating a better understanding of how to learn from data without overfitting to noise. The VLAE also leverages sophisticated statistical techniques to optimize its variational inference mechanism, making it a versatile tool in the generative modeling arsenal[1].

Results and Application

Experimental results from applying VLAEs to datasets like MNIST and CIFAR-10 demonstrate promising outcomes. For instance, when employing VLAEs on binarized MNIST, the model outperformed conventional VAEs by using an autoregressive flow (AF) prior instead of an inverse autoregressive flow (IAF) posterior, highlighting its ability to learn nuanced representations without losing critical information. The authors present statistical evidence showing that VLAEs achieve state-of-the-art results across various benchmarks[1].

Lossy Compression Demonstrated

The authors emphasize the effectiveness of VLAEs in compression tasks. By focusing on lossy representations, the VLAE is capable of generating high-quality reconstructions that retain meaningful features while disregarding less relevant data. In experiments, the lossy codes generated by VLAEs were shown to maintain consistency with the original data structure, suggesting that even in a lossy context, useful information can still be preserved[1].

Comparison with Traditional VAEs

A notable distinction between VLAEs and traditional VAEs lies in their approach to latent variables. In traditional VAEs, the latent space is usually optimized for exact reconstruction. In contrast, VLAEs allow for a more flexible interpretation of the latent variables, encouraging the model to adaptively determine the importance of certain features based on the task at hand, rather than strictly interpreting all latent codes as equally important[1].

This flexibility in VLAEs not only enhances their performance for specific tasks like classification but also improves their capabilities in more general applications, such as anomaly detection and generative art, where the preservation of structural integrity is crucial[1].

Conclusion

Variational Lossy Autoencoders represent a significant advancement in the field of generative modeling. By prioritizing the learning of structured representations and embracing lossy encoding, VLAEs provide a promising pathway for improved performance in various machine learning tasks. The integration of autoregressive models with traditional VAEs not only refines the representation learning process but also enhances density estimation capabilities. As models continue to evolve, VLAEs stand out as a compelling option for researchers and practitioners looking to leverage the strengths of variational inference in practical applications[1].


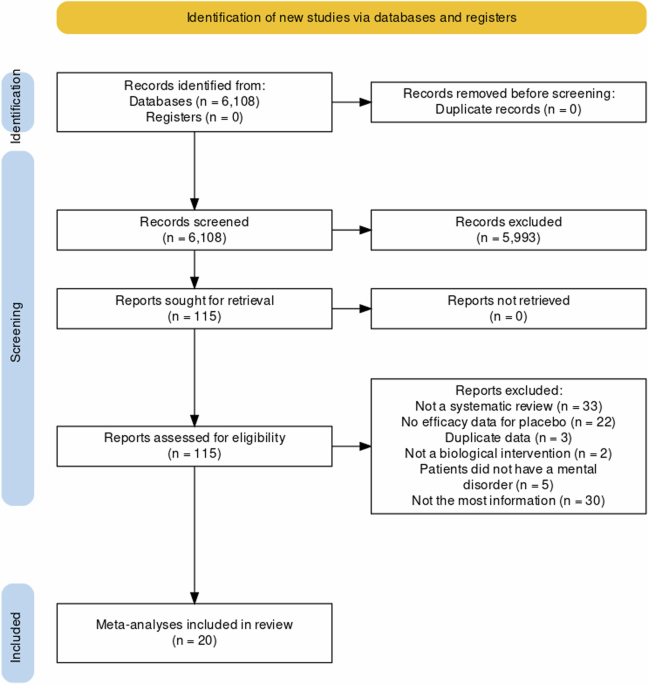

The placebo effect, a phenomenon where patients experience real improvements in their symptoms after receiving a treatment that has no therapeutic value, has intrigued scientists and clinicians for decades. This report provides an in-depth look at how placebo effects work in medicine, drawing exclusively from available texts[1][2][3][4][5][6].

Mechanisms of the Placebo Effect

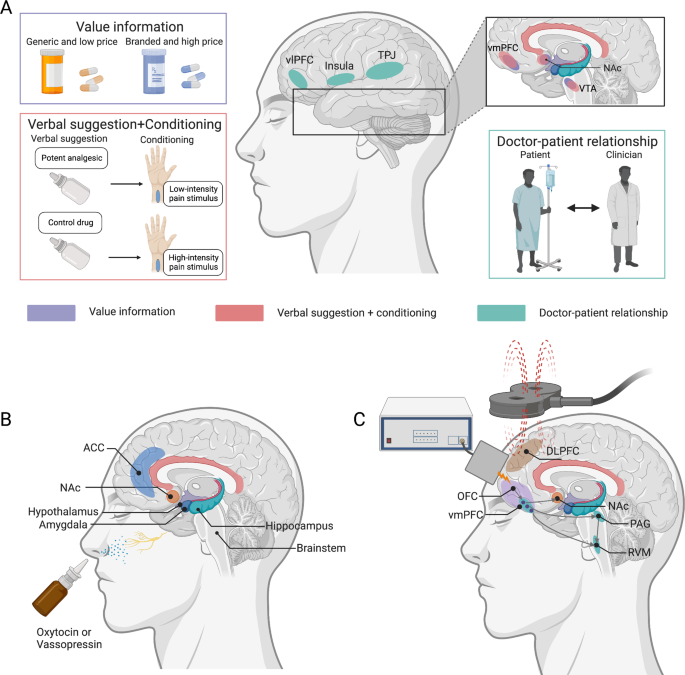

Psychosocial and Expectation-Based Mechanisms

Placebo effects arise primarily from the psychosocial context and the expectations of the patient. Positive outcomes are often linked to the patient's anticipation of relief, which in turn activates various neurobiological pathways[1]. When a patient expects pain relief, their cognitive and emotional circuits are engaged, resulting in the release of endogenous opioids and other substances that modulate the sensation of pain[1]. This expectation-driven activation involves the dopaminergic, opioidergic, vasopressinergic, and endocannabinoidergic systems, which are crucial for the placebo-induced benefits observed in pain management and other symptoms[1].

Behavioral Conditioning

Conditioning is another significant mechanism behind placebo effects. Classical conditioning involves pairing a neutral stimulus with an active treatment, leading to the neutral stimulus eventually eliciting the same response as the active treatment. Studies have shown that placebo effects can be conditioned to mimic the action of medications, such as immunosuppressive drugs, by using a novel taste as a conditioned stimulus[1][3].

Perception and Mindsets

Patients’ mindsets, which are shaped by various factors including societal norms and prior experiences, play a pivotal role in the effectiveness of treatments. For instance, mindsets about the capacity to change and the efficacy of treatments can significantly influence health outcomes. In a study where room attendants were informed that their work was equivalent to exercise, those informed showed significant health improvements despite no actual changes in their physical activities, indicating the profound impact of mindsets on health behaviors and outcomes[3].

Neurobiological Underpinnings

Neural Circuitry

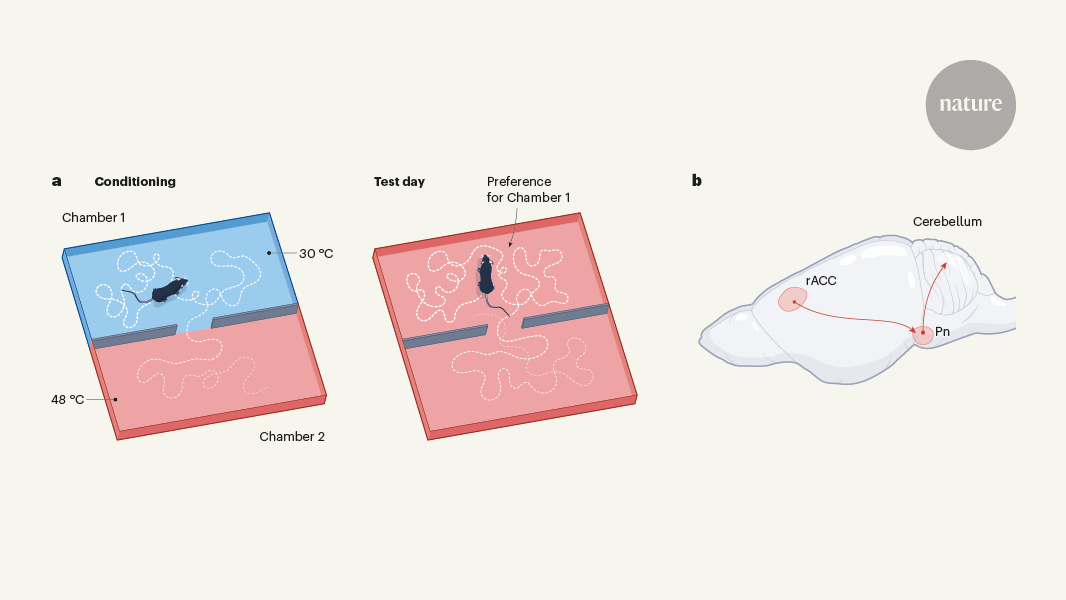

Recent studies have identified specific brain regions involved in placebo effects. For example, research on placebo pain relief using mice has pinpointed neurons in the limbic system that mediate the expectation of pain relief and send signals to the brainstem and cerebellum, regions typically associated with movement coordination[4]. This unexpected finding highlights the complexity and breadth of neural mechanisms involved in placebo effects.

Reward and Pain Modulation Systems

The placebo effect also engages the brain’s reward and pain modulation systems. The descending pain modulatory system (DPMS) and the reward system are key players. The DPMS includes regions like the cingulate cortex and prefrontal cortex, projecting to the periaqueductal gray and rostral ventromedial medulla, which are involved in pain inhibition[5]. The reward system, involving the nucleus accumbens and cerebro-dopaminergic pathways, plays a crucial role in how expectations influence pain perception and overall treatment outcomes[5].

Placebo Effects in Clinical Practice

Application and Challenges

Clinicians have long leveraged placebo effects, sometimes using treatments like “bread pills” or colored water to produce subjective improvements in patients[3]. The effect of placebos is not limited to deceptive administration. Open-label placebos, where patients are aware they are receiving a placebo, still produce significant health benefits[1][6].

In clinical trials, placebo effects pose a challenge as they can confound the assessment of a drug’s efficacy. Measures like placebo run-in periods, where patients initially receive a placebo without their knowledge, have been used to minimize these confounding effects, although their impact on trial outcomes varies[2].

Ethical Considerations

The ethical use of placebos in clinical settings remains contentious. While placebo effects can significantly improve patient outcomes, using deceptive methods to administer placebos raises ethical concerns. Thus, open-label placebos and dose-extending placebos, which combine real medications with periods of placebo administration, are considered more ethically sound alternatives[1][5].

Future Directions

Personalized Medicine and Placebo Responsiveness

Future research aims to better understand the individual variability in placebo responsiveness. Factors such as genetic predispositions, psychological traits, and prior treatment experiences influence how patients respond to placebo treatments[1][3][5]. Advancements in neuroimaging and computational modeling are expected to further elucidate these individual differences, paving the way for more personalized medical interventions that maximize therapeutic outcomes by enhancing placebo effects.

Integrating New Technologies

Non-invasive brain stimulation techniques like transcranial magnetic stimulation (TMS) and transcranial direct current stimulation (tDCS) are being explored to modulate placebo responses. By targeting specific brain regions involved in expectation and pain processing, these techniques have the potential to enhance placebo analgesia and other beneficial placebo responses in clinical settings[5].

Conclusion

The placebo effect, once seen as a mere nuisance in clinical trials, is now recognized as a potent therapeutic agent. Understanding its mechanisms—ranging from psychosocial factors and conditioning to complex neurobiological pathways—offers valuable insights into enhancing patient outcomes. As research continues to unravel the intricacies of placebo effects, integrating these findings into routine clinical practice presents both opportunities and ethical challenges that must be carefully navigated.

By incorporating psychosocial, pharmacological, and neuromodulation strategies, healthcare providers can potentially harness the power of placebos to improve therapeutic outcomes while maintaining ethical standards. The future of placebo research promises to refine these applications further, contributing to the advancement of personalized and effective medical care.


"Are these models capable of generalizable reasoning or are they leveraging different forms of pattern matching?"[1]

"Despite their sophisticated self-reflection mechanisms, these models fail to develop generalizable reasoning capabilities beyond certain complexity thresholds."[1]

"Existing evaluations predominantly focus on established mathematical and coding benchmarks, which... do not provide insights into the structure and quality of reasoning traces."[1]

"This study investigates the reasoning mechanisms of frontier LRMs through the lens of problem complexity."[1]

"Current evaluations primarily focus on established mathematical and coding benchmarks, emphasizing final answer accuracy."[1]


Yes, the quality-adjusted prices have gone down. Some adjustments aimed to allow Google to share in the value generated, indicating that as the value increased, quality-adjusted prices went down. Additionally, a proposal was made to reduce the revenue share percentage to facilitate funding for other objectives[3][2].
